Your First Focus is AI Governance:

Enhancing strategy and mitigating risk

By Lindsey Hershman

AI has the potential to augment work in every industry. Organisations that focus on value and adoption will gain a competitive advantage through operational efficiencies, enhanced experience and revenue generation.

Despite the recent hype around Generative AI, the field of Artificial Intelligence is not new. Its roots go back to Alan Turing’s 1950 paper on machine intelligence, and the term itself was coined at the Dartmouth workshop in 1956. Generative AI has put Large Language Models into the hands of every person with an internet connection. Like any new technology, Gen AI has immense potential to enhance productivity and disrupt business models. While the opportunity is immense, there is also significant risk to corporations. Organisations that fail to set up proper foundations run the risk that AI runs amok with inaccuracy, security, privacy and data protection issues. Ultimately, if these risks are not addressed up front, organisations will be unable to scale any of the much-hyped potential benefits.

What can you do to ensure you are set up for success?

AI Governance establishes the principles, policies, and procedures for the responsible development, deployment, and use of AI within an organisation. Developing a robust AI Governance Framework ensures that AI initiatives align with ethical standards, regulatory requirements, and organisational goals.

This is a significant challenge for organisations, as there is no universal agreement on what responsible and safe deployment of AI looks like. AI Access encourages organisations to conduct a readiness assessment upfront to understand their critical gaps across strategy, risk, data, infrastructure and capability.

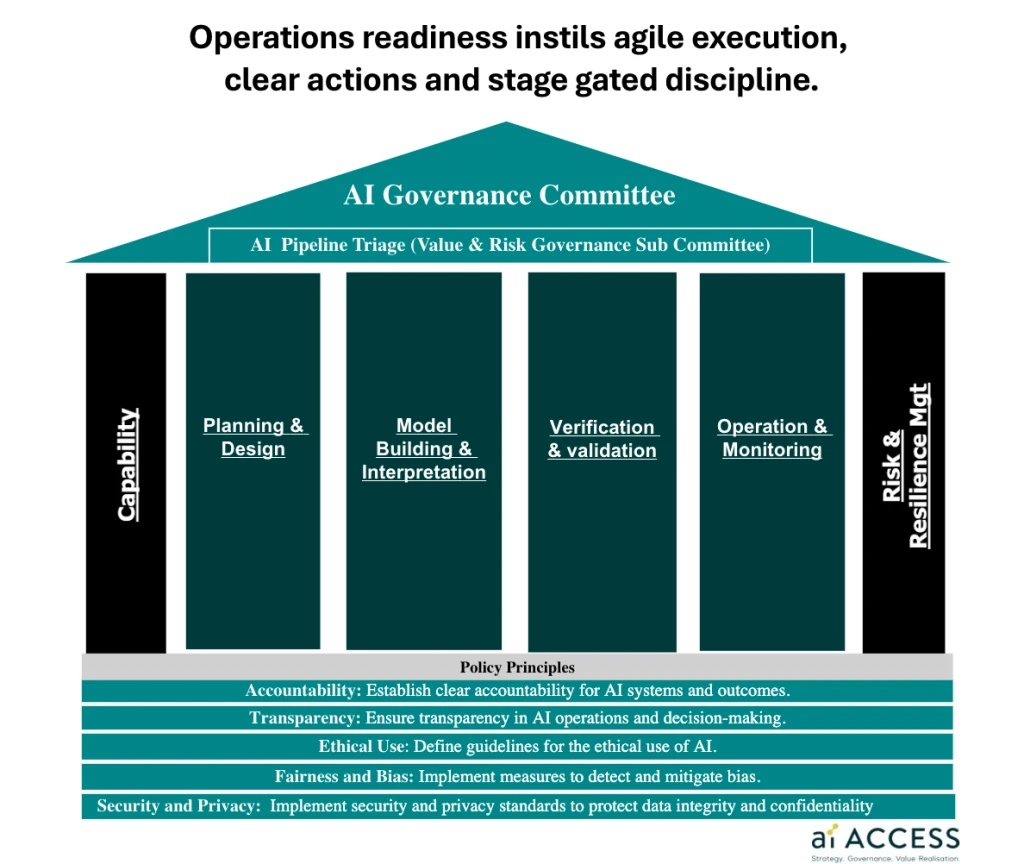

AI Access Managing Director Lindsey Hershman says setting the guardrails upfront is critical. “An enterprise AI governance framework should be set out with operational readiness in mind.” The framework needs to consider the role of an AI Governance Committee and the key principles that guide all aspects of AI within the organisation (e.g. accountability, transparency, fairness, explainability, security and safety). The framework should follow the AI system and algorithm lifecycle, giving clear guidance on the expected actions and evidence requirements at each step from planning through to operational deployment. A governance framework with clear operational readiness requirements enables a disciplined, stage-gated approach that creates clarity for the Board, Management and workforce.

What are the key risks of Gen AI?

Given the potential risk Generative AI poses to the organisation, it is all the more important to sharpen the axe before you cut. According to a BCG survey of 1,400+ C-suite executives across 50 markets, 45% of leaders say that they don’t yet have guidance or restrictions on AI and GenAI use at work. When looking at AI, Boards and management must ensure they consider and mitigate the critical risks. These include:

Quality and Accuracy: Generative AI can sometimes produce content that is inaccurate or of lower quality. For example, an AI-generated report might contain factual errors or poorly written text, which could mislead stakeholders or damage the company’s reputation.

Bias and Fairness: AI systems can inherit biases present in their training data, leading to outputs that might be discriminatory or unfair. This is particularly problematic in applications like hiring, customer service, or financial services, where biased decisions can harm the company’s reputation and result in regulatory scrutiny.

Security and Privacy: Generative AI systems can be vulnerable to cyber attacks, where malicious actors could manipulate the AI to produce harmful content or access sensitive data. Ensuring robust security measures and compliance with privacy regulations is crucial to mitigating these risks. It is also essential to identify how to use Gen AI systems with your organisation’s own data, inside its data platform and secure environment.

What does the regulatory environment look like?

Australia is seeking to take a patient and methodical approach to regulating AI. There are currently no specific statutes or regulations in Australia that directly regulate AI. However, several existing laws protect corporations, employees and consumers, including privacy, IP and data usage, cyber security, workplace health and safety, and consumer protection laws. Organisations must pay attention to the emerging regulatory environment. In September 2024 the Australian Government released the Voluntary AI Safety Standard, outlining 10 guardrails. These guardrails are likely to be adopted in mandatory legislation once it is introduced, and should feature in an organisation’s governance framework and operational readiness requirements when considering AI deployment. While the AI Safety Standard is voluntary (at this point), it is highly recommended that organisations start aligning policy and process now so they can demonstrate compliance with the guardrails.

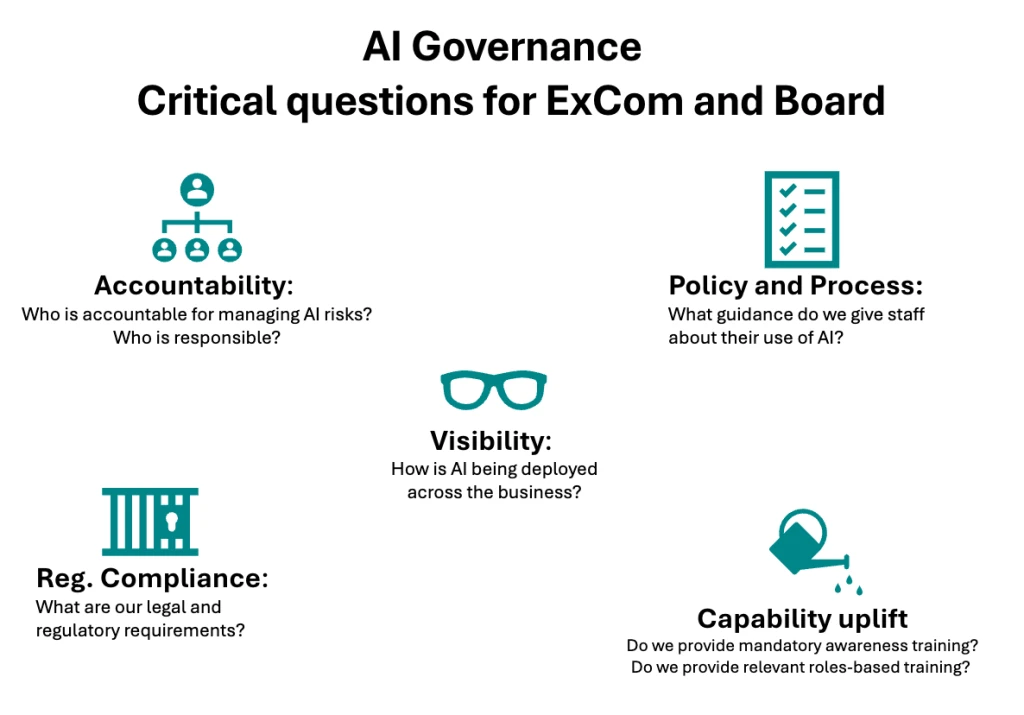

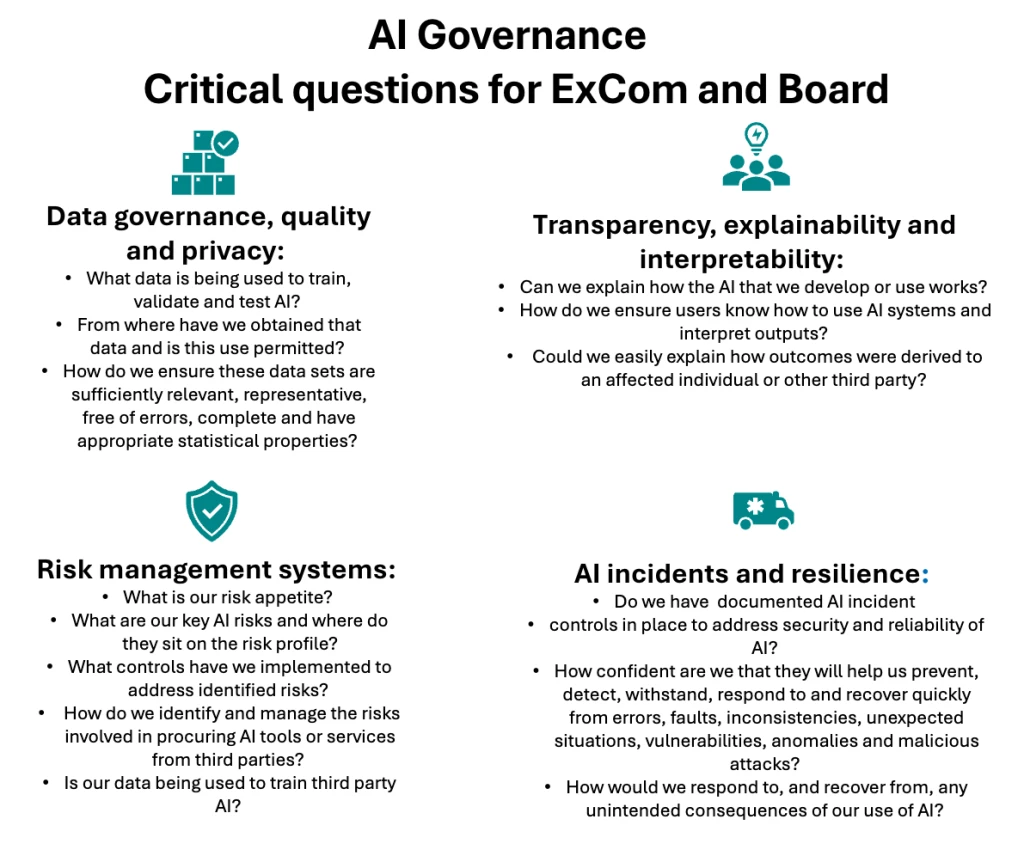

What aspects does your AI Governance framework need to cover?

What questions do you need to consider?

Too often AI Governance frameworks are merely checklists. They are focussed on a senior-level audience, resulting in ‘shotgun’ governance questioning that makes it difficult for the workforce to interpret and implement requirements. This approach also makes it difficult to build organisational capability and governance maturity. Given this, an enterprise AI governance framework should be set out with operational readiness in mind. The framework should follow the AI system and algorithm lifecycle from planning and design, building and development, and verification and testing through to operations and monitoring. This methodical approach can help both the Board and Management to take a disciplined, stage-gated approach. It ensures the workforce is clear on the requirements upfront and can identify and demonstrate the evidence required at each stage.
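For teams that want to make the stage gates concrete, the lifecycle above could be expressed as a simple evidence check. The following is a minimal sketch only: the four stage names come from the lifecycle described above, while the evidence items, the `StageGate` class and the `first_blocked_stage` function are hypothetical placeholders that each organisation would replace with its own requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageGate:
    """One lifecycle stage and the evidence needed to pass its gate."""
    name: str
    required_evidence: tuple


# Stage names follow the lifecycle in the article. The evidence items
# are illustrative assumptions, not a prescribed checklist.
LIFECYCLE = (
    StageGate("planning and design", ("use case approved", "risk assessment")),
    StageGate("building and development", ("data provenance record",)),
    StageGate("verification and testing", ("accuracy test report", "bias test report")),
    StageGate("operations and monitoring", ("monitoring plan", "incident process")),
)


def first_blocked_stage(submitted):
    """Return (stage name, missing evidence) for the first gate that is
    not yet satisfied, or None when every gate has its evidence."""
    for gate in LIFECYCLE:
        missing = [item for item in gate.required_evidence if item not in submitted]
        if missing:
            return gate.name, missing
    return None


if __name__ == "__main__":
    evidence = {"use case approved", "risk assessment", "data provenance record"}
    print(first_blocked_stage(evidence))
```

Because the gates are checked in order, a project cannot be reported as ready for a later stage while evidence from an earlier stage is still outstanding, which is the discipline a stage-gated approach is meant to provide.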

While AI and Generative AI offer significant benefits in terms of efficiency and innovation, they also pose various risks that need careful management. The good news is there are positive steps you can take to enhance your ability to leverage AI. You can start by establishing your AI guardrails upfront and conducting an AI readiness assessment. This approach can help you navigate and address the underlying AI risks, maintaining trust and integrity while maximising value.

Written by: Lindsey Hershman, Managing Director of AI Access

ai Access are experts in AI strategy, governance, development, testing and adoption.

ai Access partners with Juicebox to enable organisations to link and integrate their strategy, technology and people to realise value from AI. For more details visit